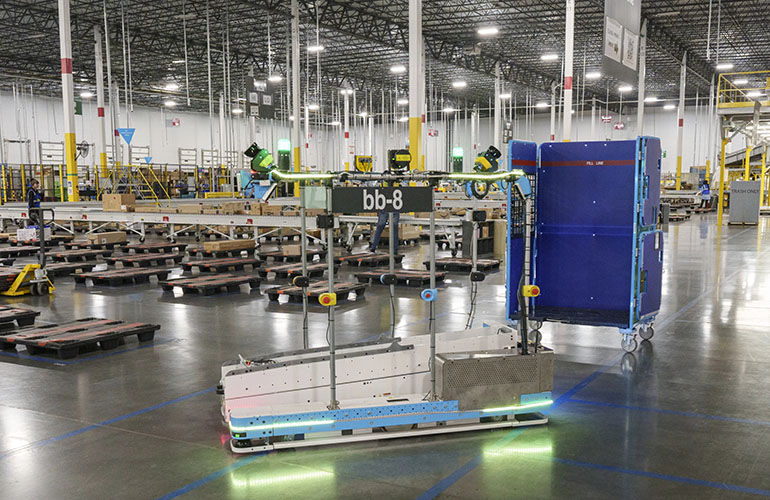

Amazon’s autonomous robot is being tested at some of its fulfillment facilities. | Source: Amazon

Amazon already has more than half a million robots working in its fulfillment centers every day. These robots perform a variety of tasks, like stocking inventory, filling orders and sorting packages, in areas with physical and virtual barriers that prevent them from interacting with human workers in the fulfillment center.

To keep workers safe and the robots moving quickly, the robots are kept away from the busy fulfillment floors, where Amazon associates are constantly moving pallets through crowded spaces littered with pillars and other obstacles.

However, some jobs on the fulfillment center floor still need doing, such as moving the 10% of items ordered from the Amazon Store that are too long, wide or unwieldy to fit in the company’s pods or on its conveyor belts. These tasks require a robot that can use artificial intelligence and computer vision to navigate the chaotic facility floor without putting any workers at risk.

This robot would also need to integrate seamlessly into Amazon’s current fulfillment centers, without disrupting the tasks that Amazon associates perform every day.

“We don’t develop technology for technology’s sake,” Siddhartha Srinivasa, director of Amazon Robotics AI, said. “We want to develop technology with an end goal in mind of empowering our associates to perform their activities better and safer. If we don’t integrate seamlessly end-to-end, then people will not use our technology.”

Amazon is currently testing a few dozen robots that can do just that in some of its fulfillment centers. Amazon Robotics AI’s perception lead, Ben Kadlec, is leading the development of the AI for these robots, which have been deployed in preliminary tests of how well they transport non-conveyable items.

These robots can understand the three-dimensional structure of the world and how that structure distinguishes each object in it. The robot can then predict how an object is going to behave based on its knowledge of the structure. This understanding, called semantic understanding or scene comprehension, along with LiDAR and camera data, lets the robot map its environment in real time and make decisions on the fly.

“When the robot takes a picture of the world, it gets pixel values and depth measurements,” Lionel Gueguen, an Amazon Robotics AI machine learning applied scientist, said. “So, it knows at that distance, there are points in space — an obstacle of some sort. But that is the only knowledge the robot has without semantic understanding.”

Semantic understanding is all about teaching a robot to take a point in space and decide if that point is a person, a pod, a pillar, a forklift, another robot or any other object that could be in a fulfillment center. The robot then decides if that object is still or moving. It takes all of this information into account when calculating the best path to its destination.
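The steps described above (label each detected object, decide whether it is still or moving, then weigh that when choosing a path) can be illustrated with a minimal Python sketch. This is not Amazon’s implementation. The `Detection` class, the clearance distances, the speed threshold and the penalty value are all hypothetical, chosen only to make the idea concrete.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    """One labelled object in the scene (hypothetical structure)."""
    label: str        # e.g. "person", "pod", "pillar", "forklift", "robot"
    x: float          # position in metres
    y: float
    vx: float = 0.0   # estimated velocity in metres per second
    vy: float = 0.0

    def is_moving(self, threshold: float = 0.05) -> bool:
        # Treat anything faster than the (assumed) threshold as moving.
        return hypot(self.vx, self.vy) > threshold


def clearance(det: Detection) -> float:
    """Assumed keep-out radius: larger for people and for moving objects."""
    base = 1.0 if det.label == "person" else 0.5
    return base * 1.5 if det.is_moving() else base


def path_cost(path, detections, penalty: float = 100.0) -> float:
    """Path length plus a fixed penalty per waypoint inside a keep-out radius."""
    length = sum(
        hypot(bx - ax, by - ay) for (ax, ay), (bx, by) in zip(path, path[1:])
    )
    violations = sum(
        1
        for (px, py) in path
        for det in detections
        if hypot(px - det.x, py - det.y) < clearance(det)
    )
    return length + penalty * violations


def best_path(candidates, detections):
    """Pick the candidate path with the lowest cost."""
    return min(candidates, key=lambda p: path_cost(p, detections))


# Example scene: a walking person in the direct line, a pillar off to the side.
scene = [Detection("person", 2.0, 0.0, vx=1.0), Detection("pillar", 2.0, 3.0)]
straight = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0)]
detour = [(0.0, 0.0), (2.0, 2.0), (4.0, 0.0)]
chosen = best_path([straight, detour], scene)
```

In this toy scene the straight path passes through the walking person’s keep-out radius, so the longer detour scores lower and is chosen. A real system would plan over a continuous map and use learned motion predictions rather than fixed radii.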

Amazon’s team is currently working on predictive models that help the robot better anticipate the paths of people and other moving objects that it encounters. They’re also working to teach the robots how best to interact with humans.

“If the robot sneaks up on you really fast and hits the brake a millimeter before it touches you, that might be functionally safe, but not necessarily acceptable behavior,” Srinivasa said. “And so, there’s an interesting question around how do you generate behavior that is not only safe and fluent but also acceptable, that is also legible, which means that it’s human-understandable.”

Amazon’s roboticists hope that if they can launch a full-scale deployment of these autonomous robots, they can apply what they’ve learned to other robots that perform different tasks. The company has already begun rolling out autonomous robots in its warehouses. Earlier this year, it unveiled its first autonomous mobile robot, Proteus.
