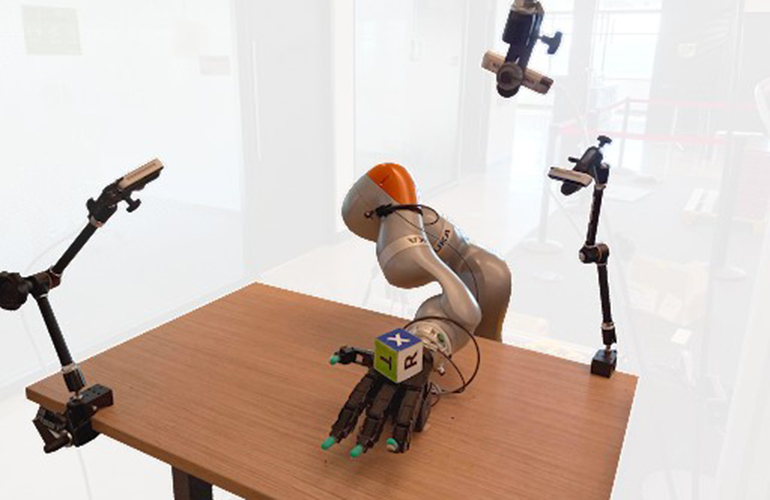

The setup for NVIDIA’s DeXtreme project using a Kuka robotic arm and an Allegro Hand. | Source: NVIDIA

Robotic hands are notoriously complex and difficult to control. The human hand they imitate consists of 27 bones, 27 joints and 34 muscles, all working together to help us perform our daily tasks. Reproducing that dexterity in a robot is more challenging than, for example, developing robots that walk on legs.

The methods typically used for robot control, whether traditional approaches built on precisely pre-programmed grasps and motions or deep reinforcement learning (RL) techniques, fall short when it comes to operating a robotic hand.

Pre-programmed motions are too limited for the generalized tasks a robotic hand would ideally be able to perform, and deep RL techniques that train neural networks to control robot joints require millions or even billions of real-world samples to learn from.

NVIDIA instead used its Isaac Gym RL robotics simulator to train an Allegro Hand, a lightweight, anthropomorphic robotic hand with three off-the-shelf cameras attached, as part of its DeXtreme project. According to the company, the Isaac simulator can run simulations more than 10,000 times faster than the real world while still obeying the laws of physics.

With Isaac Gym, NVIDIA was able to teach the Allegro Hand to manipulate a cube and match provided target positions, orientations or poses. NVIDIA’s neural network brain learned to do all of this in simulation, and the team then transferred it to control a robot in the real world.

Training the neural network

In addition to Isaac Gym, its end-to-end simulation environment, NVIDIA used its PhysX simulator to train the hand. PhysX simulates the world on the GPU, and the simulation stays in GPU memory while the deep learning control policy network is being trained.

Training in simulation provides a number of benefits for robotics. Beyond running much faster than events would play out in the real world, simulation spares the physical hardware, which is prone to breaking after a lot of use.

According to NVIDIA, the team working with the hand often had to stop after prolonged use to repair it: tightening screws, replacing ribbon cables and resting the hand to let it cool. This makes it difficult to get the kind of training the robot needs in the real world.

To train the robot’s neural network, NVIDIA’s Omniverse Replicator generated around five million frames of synthetic data, meaning NVIDIA’s team didn’t have to use any real images. The network is trained using a technique called domain randomization, which varies lighting and camera positions across the synthetic images to make the network more robust.
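The idea behind domain randomization can be sketched in a few lines of Python. This is only an illustration of the general technique, not NVIDIA's code or the Omniverse Replicator API: the `SceneParams` fields, the value ranges and the function names are all hypothetical, chosen to mirror the lighting and camera variation described above.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical per-frame scene settings (not a real Replicator type)."""
    light_intensity: float      # relative brightness, 1.0 = nominal
    light_azimuth_deg: float    # direction the light comes from
    camera_offset_m: tuple      # (dx, dy, dz) shift from the nominal camera pose


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one random scene; the ranges are assumptions for illustration."""
    return SceneParams(
        light_intensity=rng.uniform(0.5, 1.5),
        light_azimuth_deg=rng.uniform(0.0, 360.0),
        camera_offset_m=tuple(rng.uniform(-0.05, 0.05) for _ in range(3)),
    )


def generate_scene_params(num_frames: int, seed: int = 0) -> list:
    """Return one independently randomized scene per synthetic frame."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(num_frames)]


# Each frame would be rendered with its own lighting and camera pose.
scenes = generate_scene_params(5)
for scene in scenes:
    print(scene)
```

Because every frame is rendered under slightly different conditions, a network trained on such data cannot rely on one particular lighting setup or camera placement, which is what makes it more tolerant of the differences it meets on the real robot.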

All of the training was done on a single Omniverse OVX server, and the system can learn a good policy in about 32 hours. According to NVIDIA, a robot would need 42 years to gain the same experience in the real world.
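As a rough sanity check, the 32-hour and 42-year figures can be compared with the "more than 10,000 times faster" claim. The short calculation below assumes the 42 years means continuous, around-the-clock experience on a single robot, which the article does not state explicitly.

```python
HOURS_PER_YEAR = 365.25 * 24  # average year length, including leap years


def implied_speedup(real_world_years: float, training_hours: float) -> float:
    """Ratio of real-world experience time to simulated training time."""
    return real_world_years * HOURS_PER_YEAR / training_hours


speedup = implied_speedup(real_world_years=42, training_hours=32)
print(f"Implied speedup: about {speedup:,.0f}x")
```

Under that assumption the ratio comes out to roughly 11,500, which is consistent with the company's claim of more than 10,000 times real-world speed.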
